Project Soli is the wrong side of Pixel 4

Over the last year or so, Google have transformed themselves from a leaky ship when it comes to new devices, into a full-scale logistics company transporting salt water around the globe with their fleet of pre-announcement shipping tankers. A recent post on their Keyword blog not only confirmed upcoming features for their new flagship phone, but also gave us a cut-away glimpse of the internals along that unsightly and much-criticised forehead.

In a way, this was a smart move to avoid the device being written off before it is even announced — the Pixel 3, for example, was subjected to much ire thanks to leaks detailing its obnoxious notch on the XL model and it could be argued this was one of the reasons sales of the phone have been so disappointing. This new announcement from Google gives the Frankenstein forehead a reason to exist before it suffers the same derisory fate as its older brother. Indeed, the technology inside that forehead is rather impressive and may be a chance for Google to start competing with Apple in terms of hardware rather than relying on its software leverage; not only will the Pixel 4 give us a genuine chance at a FaceID competitor on an Android phone, but it also claims to let us play with our phones hands free through the use of Google’s special Project Soli chip, which allows the phone to detect fine movement without physical contact with the device. It’s a clever little chip that could give the Pixel a chance to stand out from the crowd of clone devices on the shelf and give Google the right to claim itself a serious OEM intent on providing the best hardware for the public and not just the best software for its partners. They are branding it as Motion Control.

The only problem is that it’s on the wrong side of the phone.

Firstly (and this might sound obvious, so bear with me), putting Motion Control on the front of the phone means it will only sense movement in front of the phone: you know, in front of the screen. So when we try to do anything involving Motion Control, we’ll be blocking part of the screen with our hands, and blocking more of it than when we simply touch the screen, because a hand held further from the screen takes up more of our field of view.

So why not just touch the screen? Touching the screen would be more accurate than hovering our hands vaguely in space. If we do any movement above the screen with Motion Control, then what happens on the screen as a result will only ever be an analogue of our movement rather than direct manipulation of the object. It’s the same reason why kids prefer to touch the computer screen rather than move a mouse, except that with Soli you don’t even have anything physical in your hands, making the disconnect even more apparent. Apart from building a theremin, there are going to be few use cases where hovering your hands somewhere above a screen is more comfortable than actually touching it.

Secondly, possible actions using Motion Control could end up invisible to the user and thereby go unused. The example Google have given us (swiping above the screen to switch tracks on a playlist) can not only be performed just as well (and possibly more reliably) by swiping directly on the screen, but will also need some sort of visual indicator on the screen to tell us that such a move is possible. Or do they just expect us to wave our hands above a screen (or twist our fingers like turning a crown on a watch) just to see if anything happens? That was the mistake Apple made with 3DTouch (I’m not using its other name, for issues of child protection). 3DTouch had loads of potential, from judging the weight of fruit to giving us more control over the acceleration in racing games, but its main use (to bring up extra menus, like a right click on a mouse) was hidden from the user, who had no idea whether pressing harder on their glass screen to the point it would crack would bring up that vital menu or not. Is the menu there at all, or are you just not pressing hard enough? Go on, force that phone into submission with your clamp-like steel grip.

Speaking of Apple, this brings us to the third point: if Apple (with their full control of hardware and software, walled garden and loyal band of fanboys/girls/sausages) couldn’t make 3DTouch a success, how can Google? Google have control over one single line of (not particularly well-selling) hardware, but there are literally thousands of other Android devices out there that are NOT going to have Google’s proprietary Soli chip — meaning Motion Control can only ever be used to duplicate an interaction already present in the app, rather than adding a genuine new feature. Even if genuine use-cases are found, are developers really going to add Motion Control to their apps for a niche feature of one minor OEM? Even Google’s own successful communication app (yes, one does exist among the many hundreds of failed attempts) Duo fails to take advantage of the Pixel 3’s wide-angle front facing camera. If Google don’t bother supporting niche features of their own devices, why would any other developer?

This way, Motion Control in the Pixel 4 is going to end up as little more than a gimmick.

Well, I could do better than that.

Motion Control needs to be on the back of the phone, not just on the front.

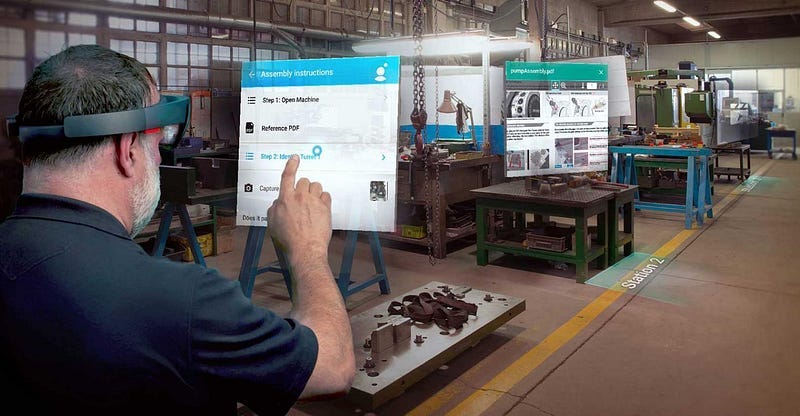

It all comes down to AR. Google, Apple, Facebook — and most of the up-and-coming titans of tech — are all ploughing resources into developing VR and its more interesting cousin, AR. Right now, Android ARCore is used for little more than games and Marvel superheroes superimposed onto your photos — but it has the potential to do so much more.

What if you could reach out and interact with the AR images on the screen in real space? Shake Iron Man’s hand; pet a Pokémon; move virtual furniture; pop your phone into a Daydream headset and interact with a virtual keyboard with your fingers rather than having to use that pokey stick controller thing that comes with it today.

This sort of fine-motion control could be possible with the Soli chip and Motion Control. Our hands won’t be in the way of the screen; we can see the things with which we are interacting and our actions can correlate with them ‘directly’; and Google controls the ways most users already interact with AR and VR through Daydream and the Camera app, meaning they can embed Motion Control into those apps immediately. Moreover, it makes Motion Control a genuinely useful addition to something they have already invested in, turning that offering into a more compelling USP rather than just another low-level gimmick ready to be forgotten. As it develops, gaining power and accuracy, we could end up running an entire virtual desktop set-up from our mobile phones.

Or, you know, we could just wave at our phones occasionally.